I’m changing my post format a tiny bit. I’ll be starting with a quick summary, so that the first thing to appear on the blog’s main page isn’t the project’s screenshot caption without the screenshot. I’m also renaming Project Outlook to Closing, because often what I write there has little or nothing to do with the project.

I’m also dropping the project stats, since so frequently the project is either nonfunctional at the end of the month due to a WIP codebase change, or I don’t have any new content which would affect the play length in any meaningful way. The line count is also deceptive, since it includes whitespace, and I have a pretty verbose Lua writing style on top of that.

As in December, I once again find myself in the middle of a change which puts the end-of-month devlog in an awkward spot. I’ve more or less accomplished what was left hanging at the end of the year. This month’s spill-over task is a heavy rewrite of the build system, migrating from Python + Pillow + standalone LuaJIT to what is essentially a second LÖVE application.

Devlog

Screenshot taken while debugging the scene shader. This is what the action scene looks like without palettization.

I have two short videos: a quick run-through of the sprite effects test room, and a quick demo of interpolating between two indexed palettes. I’ve also taken all of my previously unlisted WIP videos and made them public. I’m not sure why I had them unlisted originally, but if you want to take a peek at how the project has changed over time, now you can without having to dig through the associated devlog posts.

Summary

- Completed draw tests from December

- Rewrote HUD as a widget

- Got sprite rendering and shader effects operational with SpriteBatches

- Implemented lightweight “sprite-particle” entities

- Enhanced pixel dissolve shader effect

- Implemented “bright” shader effect

- Implemented setScissor()-style cropping as a pixel shader effect

- Gave up on trying to prevent moving platforms from jittering while the player stands on them

- Enabled strict garbage collection management based on code from 1BarDesign’s batteries library

- Started rewrite of build system, using LÖVE as a base

Draw Test

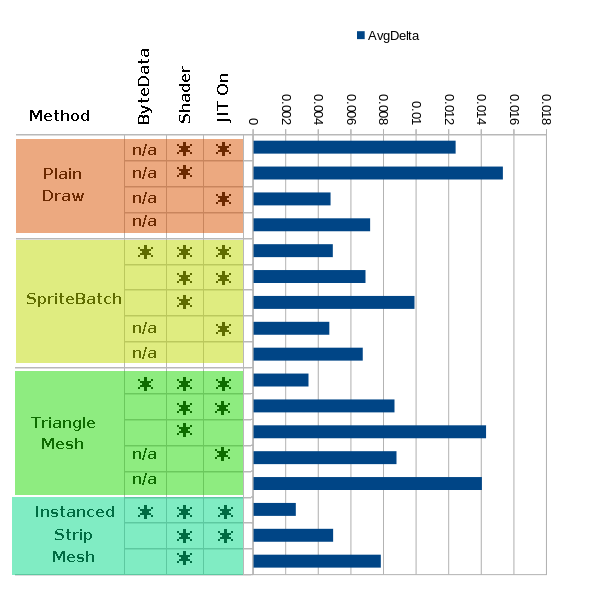

Last month, I started writing a test program to benchmark different methods of rendering 2D objects to the screen. I finished this up and ran a simple benchmark on every mode: “plain” calls to love.graphics.draw(); SpriteBatches; Triangle Meshes with a Vertex Map to condense every triangle-pair down to 4 vertices from 6; and an Instanced Mesh quad with per-instance vertex attributes. The test involved drawing 8192 sprites every frame with VSync off. The score value is the average time spent rendering one frame over a period of 120 seconds (with 20 seconds of warm-up time.)
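For the triangle mesh modes, the vertex map is just an index list that reuses two corners of each quad. A minimal sketch of building one in plain Lua, assuming each quad’s four vertices are stored in top-left, top-right, bottom-right, bottom-left order (an assumption, not necessarily the benchmark’s layout):

```lua
-- Build an index list ("vertex map") so each sprite quad needs only 4 unique
-- vertices instead of 6. Assumed corner order per quad: top-left, top-right,
-- bottom-right, bottom-left. Indices are 1-based, as mesh:setVertexMap() expects.
local function buildQuadVertexMap(quad_count)
  local map = {}
  for q = 0, quad_count - 1 do
    local base = q * 4
    -- First triangle: top-left, top-right, bottom-right
    map[#map + 1] = base + 1
    map[#map + 1] = base + 2
    map[#map + 1] = base + 3
    -- Second triangle: top-left, bottom-right, bottom-left
    map[#map + 1] = base + 1
    map[#map + 1] = base + 3
    map[#map + 1] = base + 4
  end
  return map
end
```

In LÖVE, the result would be passed to mesh:setVertexMap() on a mesh created with 4 vertices per sprite, so 8192 sprites need 32768 vertices instead of 49152.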

Here are descriptions of each subcategory:

Shader: the mode uses a shader with four per-sprite floating point variables. These variables are added to the output color as a mock activity. Rows which don’t have ‘shader’ ticked use the default LÖVE shader, and all uniform / attribute upload logic is skipped.

ByteData: LÖVE provides generic data objects which you can write to via pointers with the LuaJIT FFI. These data objects can be passed into mesh:setVertices() instead of using a table. If the ByteData-manipulating FFI calls are compiled, it’s a huge performance boost. If not, then it’s slower than just working with a table.

JIT On: whether the LuaJIT tracing compiler was enabled for the test run. (NOTE: After running these tests, I realized I had some misconceptions about how LÖVE initializes JIT. More on this later.)

I have omitted some modes where ByteData is used while JIT is disabled. Their frame-times were so long that they made the chart difficult to read. Basic scaling, flipping and rotation were implemented for the mesh modes, though not in a very robust manner. Shearing was omitted in all mesh modes.

The instanced mesh is the fastest, and by a wide margin. I believe it comes down to less vertex data being streamed to the GPU: the per-sprite values are sent once per instance, rather than being duplicated across each sprite’s four vertices. This was a pretty basic use of instancing, with no use of per-instance IDs.

Anyways, in hindsight, 8192 streamed sprites is an unrealistic number of in-game visual entities, so these numbers probably don’t mean a whole lot for my particular use case. Conclusion: manual SpriteBatches are good enough, and an improvement over plain draw calls with broken autobatching.

Manual SpriteBatching

With that done, I resumed work on implementing sprites as SpriteBatches.

As I mentioned in December, the game’s tilemap shader effects are per-layer, not per-tile, whereas the majority of sprite effects are per-sprite. It would be possible to attach per-tile vertex attributes, but I don’t have the tools to make good utilization of effects which apply to each tile separately. The logic to update individual tiles could introduce a lot of overhead as well. This is something I can revisit in the future if such effects are desired.

Anyways, tilemap layer effects were implemented as uniforms. Sprite effects were uniforms, and now they’re per-vertex attributes. The game alternates between drawing sprites and tilemap layers in this fashion:

- Sprites behind everything

- Tilemap backgrounds

- Sprites behind the stage

- Tilemap stages

- Sprites behind the foreground

- Tilemap foregrounds

- Sprites in front of everything

I tested a few ways of handling this, including writing separate shaders for tilemaps and sprites. I ended up using a single shader with uniforms for tilemap effects, and varying attributes for sprite effects. One of these sets of values is always zeroed out. Tilemaps do not have vertex attributes attached, so all floating point attributes default to 0. When preparing to draw a sprite-layer, all uniforms are zeroed out before the call to love.graphics.draw(). Then, in the vertex shader, uniform and attribute versions of the effects are added together and passed on to the pixel shader.
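The CPU side of that arrangement might look roughly like the following sketch. The layer names, the fx_names list and the send/draw callbacks (stand-ins for shader:send() and love.graphics.draw()) are hypothetical, and the shader-side sum of uniform and attribute values is not shown:

```lua
-- Hypothetical draw order matching the list above: sprite layers and tilemap
-- layers alternate, from back to front.
local DRAW_ORDER = {
  { kind = "sprites", layer = "behind_all" },
  { kind = "tilemap", layer = "background" },
  { kind = "sprites", layer = "behind_stage" },
  { kind = "tilemap", layer = "stage" },
  { kind = "sprites", layer = "behind_foreground" },
  { kind = "tilemap", layer = "foreground" },
  { kind = "sprites", layer = "front" },
}

-- send(name, value) stands in for shader:send(); draw(obj) for love.graphics.draw().
-- fx_names lists the tilemap effect uniforms (hypothetical names).
local function drawScene(scene, fx_names, send, draw)
  for _, step in ipairs(DRAW_ORDER) do
    if step.kind == "sprites" then
      -- Sprite effects come from per-vertex attributes, so zero every uniform.
      for _, name in ipairs(fx_names) do
        send(name, 0)
      end
      draw(scene.sprites[step.layer])
    else
      -- Tilemaps have no effect attributes attached (they read as 0 in the
      -- shader), so their per-layer effects go through the uniforms instead.
      local map_layer = scene.tilemaps[step.layer]
      for _, name in ipairs(fx_names) do
        send(name, map_layer.fx[name] or 0)
      end
      draw(map_layer)
    end
  end
end
```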

Everything seems good so far. I have a limitation of 1024 sprites per layer because I haven’t gotten around to writing the code to enlarge the attached attribute meshes in the event that this quantity is surpassed. In a typical session, there are at most a few hundred sprites active at a time, so I think it will be okay for a while.
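If the 1024-sprite limit does become a problem, a doubling strategy keeps resizes rare. A sketch, where make_mesh() is a hypothetical callback that would build a new attribute mesh (4 vertices per sprite) and re-attach it with SpriteBatch:attachAttribute():

```lua
-- Returns the capacity needed to hold `needed` sprites, doubling from the
-- current size so that resizes happen rarely.
local function grownCapacity(current, needed)
  local capacity = current
  while capacity < needed do
    capacity = capacity * 2
  end
  return capacity
end

-- layer.capacity is the current sprite limit; make_mesh(capacity) is a
-- hypothetical stand-in for creating and attaching a larger attribute mesh.
-- Returns true if a resize happened.
local function ensureCapacity(layer, needed, make_mesh)
  if needed <= layer.capacity then
    return false
  end
  layer.capacity = grownCapacity(layer.capacity, needed)
  layer.attr_mesh = make_mesh(layer.capacity)
  return true
end
```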

Sprite Cropping Revisited

Earlier, I implemented axis-aligned cropping on a per-sprite basis with scissor boxes. These aren’t compatible with individual sprites within a SpriteBatch (they would apply to all sprites drawn as part of the batch), so I looked into ways of doing this by messing with the quads attached to sprites. Scissor boxes were a pretty straightforward solution which behaved like a floating window over the sprite. Editing quads turned out to be more complicated, due to the fact that animation frames are trimmed prior to being added to the texture atlas. As a result, the vast majority of animations have frames with varying dimensions.

Frustrated, I settled on a compromise: allow sprites to have custom quads, and deactivate the sprite’s ability to animate when this state is active. It wasn’t as effective as the old scissor box method, but I figured it would be enough for effects where objects tear apart in pieces.

A day or two later, I realized I could pass cropping boundaries into the pixel shader and get nearly the same behavior as scissors. Oops. So now I have two methods for cropping. It’s worth keeping the custom-quad method, since custom quads should have less pixel fill-rate overhead.
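On the Lua side, a setScissor()-style rectangle only needs converting to the corner layout used by fx_crop. A minimal sketch, with a plain-Lua mirror of the shader test for checking edge behavior; it assumes screen_coords sit at pixel centers (x + 0.5), as with GLSL’s gl_FragCoord:

```lua
-- Convert a setScissor()-style rectangle (x, y, width, height, in screen
-- pixels) into the (x1, y1, x2, y2) layout that fx_crop uses.
local function scissorToCrop(x, y, w, h)
  return { x, y, x + w, y + h }
end

-- Plain-Lua mirror of the pixel shader test: a pixel survives when its screen
-- coordinate lies in the half-open box [x1, x2) x [y1, y2).
local function cropKeeps(crop, sx, sy)
  return sx >= crop[1] and sy >= crop[2] and sx < crop[3] and sy < crop[4]
end
```

Because the far edges use >=, a crop of width w keeps exactly w pixel columns, the same count setScissor() would keep.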

The crop check in the pixel shader looks like this, located at the top of the function:

```glsl
// fx_crop is a vec4: (x1, y1, x2, y2).
if (screen_coords.x < fx_crop.x
    || screen_coords.y < fx_crop.y
    || screen_coords.x >= fx_crop.z
    || screen_coords.y >= fx_crop.w) {
    discard;
}
```

The crop can be debugged by messing with the RGBA output:

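```glsl
// Debug! Discarded fragments never reach this code, so disable the
// discard check above while using it.
/*
if (screen_coords.x < fx_crop.x) {
    color.r = 0.0;
}
if (screen_coords.y < fx_crop.y) {
    color.g = 0.0;
}
if (screen_coords.x >= fx_crop.z) {
    color.b = 0.0;
}
if (screen_coords.y >= fx_crop.w) {
    color.a = 0.25;
}
*/
```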

Sprite-Particles

I’ve been using an actor called gp_sprite to implement throwaway visual effects such as impact graphics. Since I wrote it, actors have become more complex and have more overhead. Instead of generating a bunch of actors to implement these throwaway sprites, I made a separate per-scene particle table which provides similar functionality. Each sprite-particle contains a sprite structure and a few behavior rules, which are mostly taken from gp_sprite.
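A stripped-down version of such a particle table could look like this. It is a hypothetical structure (position, velocity and lifetime only), not the rules taken from gp_sprite; dead particles are compacted out in place so that spawn order is kept:

```lua
local Particles = {}
Particles.__index = Particles

function Particles.new()
  return setmetatable({ list = {} }, Particles)
end

function Particles:spawn(x, y, vx, vy, lifetime)
  local p = { x = x, y = y, vx = vx, vy = vy, life = lifetime }
  self.list[#self.list + 1] = p
  return p
end

-- Advance every particle and drop the expired ones in a single pass.
function Particles:update(dt)
  local list, n = self.list, #self.list
  local write = 0
  for read = 1, n do
    local p = list[read]
    p.life = p.life - dt
    if p.life > 0 then
      p.x = p.x + p.vx * dt
      p.y = p.y + p.vy * dt
      write = write + 1
      list[write] = p -- shift survivors down, preserving their order
    end
  end
  -- Clear the now-unused tail slots.
  for k = write + 1, n do
    list[k] = nil
  end
end
```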

I looked at every instance in the codebase of gp_sprite being spawned, and migrated about 90% of them to sprite-particles. Some wouldn’t be a good fit because they rely on actor-pinning logic, which sprite-particles don’t have. Another quirk of my implementation is that for a given layer + priority setting, sprite-particles are always appended to the lists after all actor sprites are sorted. So given an actor-sprite and a sprite-particle at equal layer + priority settings, the sprite-particle will always appear in front of the actor-sprite.
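The ordering quirk falls out of how the lists are built. A sketch with hypothetical field names, where each layer keeps one bucket per priority value and particles are appended after the already-sorted actor sprites:

```lua
-- Actor sprites go into their priority bucket first (in the order the actor
-- sort produced); sprite-particles are appended afterwards, so within the
-- same layer + priority a particle is always drawn after (in front of) actors.
local function buildBuckets(sorted_actor_sprites, particles)
  local buckets = {}
  local function bucketFor(priority)
    local b = buckets[priority]
    if not b then
      b = {}
      buckets[priority] = b
    end
    return b
  end
  for _, s in ipairs(sorted_actor_sprites) do
    local b = bucketFor(s.priority)
    b[#b + 1] = s
  end
  for _, p in ipairs(particles) do
    local b = bucketFor(p.priority)
    b[#b + 1] = p
  end
  return buckets
end

-- Flatten the buckets back to front, lowest priority first.
local function flatten(buckets)
  local priorities = {}
  for pr in pairs(buckets) do
    priorities[#priorities + 1] = pr
  end
  table.sort(priorities)
  local out = {}
  for _, pr in ipairs(priorities) do
    for _, s in ipairs(buckets[pr]) do
      out[#out + 1] = s
    end
  end
  return out
end
```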

I was curious about using a LÖVE ParticleSystem as a way to generate and draw tons and tons of small square sparks. I found that it can indeed emit hundreds to thousands of particles without totally tanking the framerate. However, actually seeing the effect in-game, it looked a bit out of place, so I backed it out.

Shader FX: Improved Pixel-Dissolve

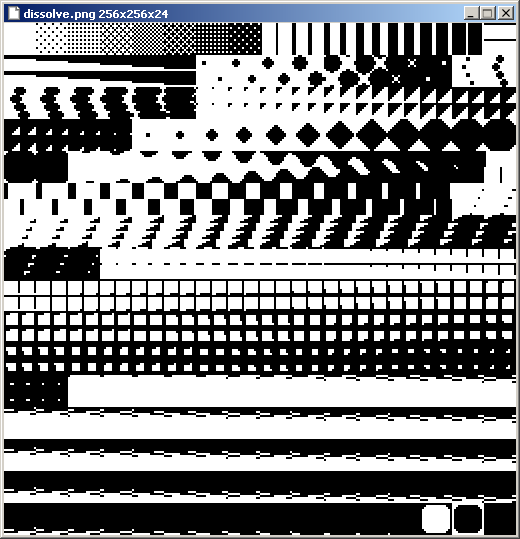

The current dissolve texture.

Pixel-dissolve was a shader effect I implemented as a way to apply a kind of dithered transparency mask to sprites and background layers. The masks were implemented as an 8×8 ArrayImage, with each step of the dissolution generated via nested for loops. I increased the ArrayImage size to 16×16, redrew the dissolve frames by hand, and added some additional variables to allow sprites and tilemaps to offset the dissolve mask at the screen-coordinate level.
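A plain-Lua model of the lookup, assuming the dissolve amount (0 to 1) selects an ArrayImage layer and the screen position plus a per-object offset wraps into the 16×16 mask. The frame-selection rule and the names are assumptions; in the game, this happens in the pixel shader:

```lua
local MASK_SIZE = 16 -- each dissolve mask frame is 16x16 texels

-- amount: 0 (fully visible) to 1 (fully dissolved).
-- frame_count: number of layers in the dissolve ArrayImage.
-- sx, sy: screen coordinates; offset_x, offset_y: per-object mask offset.
-- Returns the 0-based layer index and the texel to sample within it.
local function dissolveSample(amount, frame_count, sx, sy, offset_x, offset_y)
  local frame = math.floor(amount * (frame_count - 1) + 0.5)
  -- Lua's % returns a non-negative result for a positive divisor,
  -- so negative offsets wrap correctly.
  local u = (math.floor(sx) + offset_x) % MASK_SIZE
  local v = (math.floor(sy) + offset_y) % MASK_SIZE
  return frame, u, v
end
```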

Shader FX: ‘Bright’

I was inspired by a page on the Sega Genesis’s Shadow + Highlight feature to add a shader variable that brightens or darkens the pixel output (in this case, a simple addition to each RGB value sampled from the texel). Setting fx_bright to a sufficiently high value makes all non-transparent pixels white, which can then be tinted to other colors with the standard RGBA variables.
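Per pixel, the math amounts to an addition followed by the usual tint. A plain-Lua model, not the actual shader; colors are {r, g, b, a} tables in the 0 to 1 range:

```lua
local function clamp01(v)
  if v < 0 then
    return 0
  elseif v > 1 then
    return 1
  end
  return v
end

-- bright is added to RGB (negative values darken); tint is the RGBA color.
local function applyBright(texel, bright, tint)
  return {
    clamp01(texel[1] + bright) * tint[1],
    clamp01(texel[2] + bright) * tint[2],
    clamp01(texel[3] + bright) * tint[3],
    -- Alpha is untouched by bright, so transparent pixels stay transparent.
    texel[4] * tint[4],
  }
end
```

Clamping before the tint multiply is a choice made in this sketch: without it, a channel pushed to 1.2 would come out at 0.6 under a 50% tint instead of 0.5, so over-bright values would leak through the tint.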

I think the ramping of this effect may be off due to not respecting gamma correction. It should be OK for simple blinking effects. Maybe the strange cut-off (since it’s an addition, not multiplication) would look interesting as well when applied to certain kinds of colors. I’ve been considering this effect for at least a couple of months now, and it’s nice to finally see it in action.

Rewriting the HUD / Status Bar

The original status bar was written in a per-actor draw() callback, a feature which no longer exists. It was migrated to a “function sprite”, which would call an arbitrary drawing function as part of the scene-draw process. This, too, is no longer a thing. Now, it’s implemented as a widget in the controller scene, where things like the options menu and title art reside. (This is why the status bar elements are not Virtual Boy red in the screenshot at the top of this post.)

Even though it’s in front of the 4th wall, the invisible action_manager actor is still responsible for updating its state. To avoid a situation where an action scene actor is directly messing with the controller scene, I made an intermediary toolkit module, owned by neither scene, where action_manager can write new state and status_bar can read from it when it’s time to update and draw.
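The go-between module can be as small as a keyed table. A sketch under hypothetical names (hud_state, set, get, version); as its own file, it would end with `return hud_state`:

```lua
-- hud_state: written by action_manager during the action scene's update,
-- read by status_bar in the controller scene. Neither scene needs a
-- reference to the other.
local hud_state = { version = 0 }
local values = {}

function hud_state.set(key, value)
  if values[key] ~= value then
    values[key] = value
    -- A change counter lets the reader skip redundant refreshes.
    hud_state.version = hud_state.version + 1
  end
end

function hud_state.get(key, default)
  local v = values[key]
  if v == nil then
    return default
  end
  return v
end
```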

A Case of the Pixel Jitters

This has been a recurring topic in my devlog posts. In a pixel art game with sub-pixel precision, the player standing on a moving platform will often cross pixel column boundaries at a different time than the platform itself, unless the platform is moving one base-pixel per tick, or some workaround is implemented. What I have tried unfortunately messes with the simulation: only moving the game objects at intervals which match the base scale. That essentially worked when the game was rendered to a 480×270 canvas and scaled up, but now that sprites are rounded to the nearest screen pixel, there is no one single scale.
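The jitter is easy to reproduce with plain numbers. In this sketch (hypothetical values: a platform moving 0.3 pixels per tick, with the player standing 5.4 pixels to its right), rounding each position independently makes the drawn gap flip between 5 and 6, while rounding the offset separately keeps it fixed:

```lua
local function round(v)
  return math.floor(v + 0.5)
end

-- Player and platform rounded independently: the on-screen gap depends on
-- where the platform sits within a pixel.
local function independentGap(platform_x, offset)
  return round(platform_x + offset) - round(platform_x)
end

-- Platform rounded first, then a rounded offset added: the gap stays fixed,
-- at the cost of the player's drawn position drifting up to a pixel away
-- from its simulated position.
local function anchoredGap(platform_x, offset)
  return (round(platform_x) + round(offset)) - round(platform_x)
end
```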

At this point, I have kind of stopped caring. Vertical movement still looks OK because the player’s sprite is basically snapped to the same alignment as the platform. Maybe I could make horizontal platforms vibrate a bit, or do something else to distract from the issue. RNavega’s pixel art interpolation shader might be a solution, if I could get it to work with my indexed palette implementation (or if I just dumped palettes entirely), but I’m pretty sure that requires a 1 pixel perimeter of blank pixels around each sprite, so quad-cutting would be incompatible.

Garbage Step

Back in July 2020, I pulled in and modified a function from 1BarDesign’s batteries library which runs the Lua garbage collector in short steps. I left it commented out at the time because I wasn’t sure about the project’s memory needs. I think the game is generally better at handling memory at this point, so I’ve enabled it again. Lua’s memory counter will increase as a result of something as innocuous as converting a number to a string, which you need to do in order to display the current memory usage. Running the GC in tiny steps should prevent substantial build-up of references which must eventually be collected.

I added a cooldown to limit the frequency of steps, defaulting to 1/30th of a second, so the number of steps per second should be roughly the same whether the game is running at 30 FPS or 500 FPS. The function is called in love.update(), so it doesn’t affect the application startup process.
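A cooldown-gated step can be sketched in a few lines. This is not the batteries implementation; GCStepper, the 1/30 s default interval and the step size of 0 (a basic step) are assumptions:

```lua
local GCStepper = {}
GCStepper.__index = GCStepper

-- interval: minimum seconds between steps (defaults to 1/30).
-- step_size: argument passed to collectgarbage("step", ...); 0 is a basic step.
function GCStepper.new(interval, step_size)
  return setmetatable({
    interval = interval or 1 / 30,
    step_size = step_size or 0,
    timer = 0,
    steps_run = 0,
  }, GCStepper)
end

-- Call once per frame with the frame's delta time.
function GCStepper:update(dt)
  self.timer = self.timer + dt
  if self.timer >= self.interval then
    -- Reset instead of carrying the remainder over, so a long frame never
    -- leaves a backlog that forces a step on every following frame.
    self.timer = 0
    collectgarbage("step", self.step_size)
    self.steps_run = self.steps_run + 1
  end
end
```

A single GCStepper.new() would be created at startup, with :update(dt) called from love.update(dt).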

For reference, see the Lua 5.1 manual’s section on garbage collection (§2.10) and its entry for collectgarbage().

Indexed Palette Changes

I rewrote the implementation of indexed palettes. Overall, there are three changes:

- Before, each scene context had its own palette of 256 colors, and there was a theoretical maximum of 256 palettes. (I thought that, at most, about a dozen or so would ever be used.) Now, all scenes share a single global palette. I made this change because the only scenes that really used palettization were the action scene and the background matte. Nearly everything else (menu system, etc.) uses the default LÖVE shader with no effects. There was also a hack to overwrite the matte palette table with the one belonging to the action scene, so multiple palettes served no real purpose and just made indexed colors harder to manage.

- I’m using a slightly more efficient method of updating the palette data. Originally the palette was sent to the GPU as a canvas, and updated by calling love.graphics.setColor() and love.graphics.points() for every changed pixel. Now an ImageData is updated using mapPixel(), and the resulting data is uploaded to the texture using replacePixels().

- Palette tables stored each color as a separate table. I flattened them out so that they are stored as one array table ({r1, g1, b1, a1, r2, g2, b2, a2}, etc.) I hope I don’t end up regretting this decision.
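With the flattened layout, a pixel function for ImageData:mapPixel() only needs an index calculation. A sketch, assuming a 256×1 palette image so that the 0-based x coordinate is the palette index; makePaletteMapper is a hypothetical name:

```lua
-- Flat palette layout: {r1, g1, b1, a1, r2, g2, b2, a2, ...}, 0..1 per channel.
-- Returns a function with the signature ImageData:mapPixel() expects:
-- (x, y, r, g, b, a) -> r, g, b, a. The incoming color is ignored.
local function makePaletteMapper(palette)
  return function(x, y, r, g, b, a)
    local i = x * 4
    return palette[i + 1], palette[i + 2], palette[i + 3], palette[i + 4]
  end
end
```

In LÖVE, this would be used as palette_data:mapPixel(makePaletteMapper(live_palette)) followed by palette_image:replacePixels(palette_data), where both objects are hypothetical names; mapPixel()’s optional x, y, width, height arguments can limit the pass to a range of entries.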

I also wrote a function to interpolate between two indexed palettes and paste the result into the live global palette. A demonstration can be seen in the second video I posted for the month.
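Interpolating two flattened palettes is a single loop. A sketch that writes into an existing table such as the live palette; it lerps the stored values directly with no gamma correction, which is an assumption:

```lua
-- Linear interpolation between two flat palettes of equal length, written
-- into `out` in place. t = 0 gives palette a, t = 1 gives palette b.
local function lerpPalettes(a, b, t, out)
  for i = 1, #a do
    out[i] = a[i] + (b[i] - a[i]) * t
  end
  return out
end
```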

Revisiting jit.off()

While reading the LÖVE 11.4 source code, I noticed that some functions are selected at boot time depending on the state of the jit module. If JIT is active, then FFI implementations of those functions are used. If not, then standard Lua C API versions are used instead. The FFI versions are faster when the code is compiled, but slower than the Lua C API versions when it’s not.

Due to a past stability issue, I have been turning off JIT after the project initializes. If I’m reading the source code correctly, that means LÖVE has the FFI versions of functions loaded, even though my game has JIT disabled after startup.

There is also some code in 11.4 to mitigate a LuaJIT allocation issue on ARM64. I grepped the codebase for jit.status and added some print commands to get an idea of the initialization order:

- Do JIT allocation workaround (modules/love/jitsetup.lua)

- Run love.conf()

- Select Lua C API or FFI implementations of the following modules:

  - SoundData (modules/sound/wrap_SoundData.lua)

  - ImageData (modules/image/wrap_ImageData.lua)

  - RandomGenerator (modules/math/wrap_RandomGenerator.lua)

  - Math (modules/math/wrap_Math.lua)

- Run main.lua

- Run love.load()

So if I want the Lua C API versions of those functions to be called, I need to call jit.off() in love.conf. And it’s probably good not to mess with JIT state once the application is running. SoundData, RandomGenerator and Math aren’t a huge deal to me, but I am using ImageData:mapPixel() to update indexed palette data, and I would prefer it to use the most appropriate version of that function.
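In practice, that means something like the following conf.lua. A sketch; the `jit` check is only there so the file still loads if the jit module is unavailable:

```lua
-- conf.lua
function love.conf(t)
  -- Turn off the LuaJIT compiler here, before LÖVE chooses between the FFI
  -- and Lua C API wrappers (that choice is made after love.conf() returns).
  if jit then
    jit.off()
  end

  -- ...the rest of the usual settings (t.identity, t.window, etc.) go here.
end
```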

There is one complication with calling jit.off() in conf.lua: startup takes over twice as long. Most of this time is attributable to converting graphics with ImageData methods to a format that the game engine can work with. As a result of these findings, I reviewed which startup tasks could be migrated to the build system.

Revisiting Build Time

I have written a few times about using Python as the base for my game’s build process. There are two reasons why. First: LÖVE 11.x’s filesystem module is only able to write to a save directory determined by the OS and game identity. Second: unless you’re willing to pull in additional libraries, Lua at the command line is very basic. It is that way by design: the expectation is that Lua will be embedded into a host application, and that host will provide the missing features. On the other hand, Python really excels as a command line utility, as not only are there a ton of built-in modules for handling common tasks, the additional libraries are also easier to install.

The tasks I considered shifting to build time were:

- Sprite frame trimming, to get rid of 100% transparent columns + rows

- Palettization of spritesheets and tilesets

- Atlas packing
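Frame trimming is mostly a bounding-box search. A sketch in plain Lua; get_alpha is a hypothetical callback that could wrap ImageData:getPixel() (whose fourth return value is alpha), and the returned x, y double as the offset needed to draw the trimmed frame in its original position:

```lua
-- Coordinates are 0-based, like ImageData. Returns x, y, w, h of the smallest
-- box containing every non-transparent pixel, or nil if the frame is empty.
local function trimBounds(width, height, get_alpha)
  local min_x, min_y, max_x, max_y = width, height, -1, -1
  for y = 0, height - 1 do
    for x = 0, width - 1 do
      if get_alpha(x, y) > 0 then
        if x < min_x then min_x = x end
        if y < min_y then min_y = y end
        if x > max_x then max_x = x end
        if y > max_y then max_y = y end
      end
    end
  end
  if max_x < 0 then
    return nil -- 100% transparent frame
  end
  return min_x, min_y, max_x - min_x + 1, max_y - min_y + 1
end
```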

Trimming and atlas packing require sprite animations and tilesets to be initialized first. It could be done, but I was not enthusiastic at all about converting hundreds of lines of Lua to Python, the latter of which I’m not very fluent in. There is, however, a 3rd party LÖVE library which can do file operations in arbitrary paths: grump’s nativefs. (The original repository has disappeared, but EngineerSmith created a mirror, and the license is MIT, so as far as I can tell, it’s okay to use.)

With that library in hand, I can convert most or all of the build system to another LÖVE application. LÖVE can run “headless” without a window, and while love.graphics isn’t accessible in this state, love.image is. From experience, I know that ImageData pixel manipulations are considerably faster than Python + Pillow.

After some tests to ensure nativefs will work on my system (it seems quite well-written), I started a new LÖVE application skeleton, and began reviewing what Python scripts would have to be converted over to Lua.

Gale to ImageData

This was the most substantial Python script in the old build system. GraphicsGale image in, PNG out. The developer, HUMANBALANCE, provides a DLL for loading Gale files, but as far as I can tell, it’s not easy to get working outside of Windows.

I think I’m close to completing this and putting it online, but a few issues remain. Here’s where I’m at:

- LÖVE is able to decompress zlib data chunks, so that part is taken care of.

- I had to add XML parsing for the header format (using an existing kind-of-XML parser I wrote for Tiled maps), conversion from Latin 1 to UTF-8, and of course parsing the binary data to tables of numbers.

- My old Python script didn’t correctly account for byte padding, so it only worked correctly if the number of bytes per line was a multiple of 4. By coincidence, all of my images happened to be aligned, so I was able to ignore this for a while. I finally caught the issue and fixed it.

- I redesigned the interface to allow loading just the Gale header and noting the offsets for each data block, instead of loading and decompressing + parsing the entire file all at once. I thought this might be faster in cases where you only need one specific layer out of a multi-frame, multi-layer file. However, it would have to seek through the file for all of the offsets anyways. I dunno. I’ll probably revisit it once I have the build system working again.

- I originally skipped supporting 15bpp and 16bpp images. That should really be taken care of if I put it online.
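Two of the smaller pieces above fit in a few lines each: the Latin-1 to UTF-8 conversion and the padded row size. A sketch; it assumes rows are padded to a 4-byte boundary (as the byte-padding fix implies) and that bits_per_pixel is the storage size of one pixel:

```lua
-- Latin-1 (ISO 8859-1) to UTF-8: bytes 0x00-0x7F are copied as-is; 0x80-0xFF
-- become two-byte sequences. Written without bit operators so it runs on Lua 5.1.
local function latin1ToUtf8(s)
  return (s:gsub("[\128-\255]", function(c)
    local b = c:byte()
    return string.char(0xC0 + math.floor(b / 64), 0x80 + b % 64)
  end))
end

-- Bytes per row after padding each row up to a multiple of 4 bytes.
local function paddedRowBytes(width, bits_per_pixel)
  return math.floor((width * bits_per_pixel + 31) / 32) * 4
end
```

With rows padded this way, row y (0-based) would start at byte y * paddedRowBytes(width, bpp) + 1 of the decompressed string.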

Closing

And that is where I’m ending the month: feeding tons of small .gal files into a LÖVE application, spitting them back out as PNG files, and checking if they look the same. I expect the build system changes to drag on for another week or two, possibly up to the 15th of February. (Hopefully not.) I’ll make another post around the 28th.